The practice of trying to get an AI system to act in harmful or unintended ways, in order to anticipate the harm it could do, is called 'red teaming.' Here, one of the red teamers for OpenAI's GPT-4 argues that further steps are needed. (Photo: Getty)

in order to help OpenAI understand the risks it posed, so they could be addressed before its public release. This is called AI red teaming: attempting to get an AI system to act in harmful or unintended ways.

The author consults for funders and companies on AI governance and is an affiliate of Harvard’s Berkman Klein Center and GovAI.

Red teaming is a valuable step toward building AI models that won’t harm society. To make AI systems stronger, we need to know how they can fail, and ideally we learn that before they create significant problems in the real world. Imagine what might have gone differently had Facebook tried to red-team the impact of its major AI recommendation system changes with external experts, and fixed the issues they found, before those changes affected elections and conflicts around the world.

Normalizing red teaming with external experts and public reports is an important first step for the industry. But because generative AI systems will likely affect many of society’s most critical institutions and public goods, red teams need people with a deep understanding of these domains in order to anticipate and mitigate potential harms. For example, teachers, therapists, and civic leaders might be paired with more experienced AI red teamers to grapple with such systemic impacts.

After a new system is released, carefully allowing people who were not part of the prerelease red team to try to break the system, without risk of being banned, could help identify new problems and potential fixes. […], which explore how different actors would respond to model releases, can also help organizations understand more systemic impacts.

But if red-teaming GPT-4 taught me anything, it is that red teaming alone is not enough. For example, I just tested Google’s Bard and OpenAI’s ChatGPT and was able to get both to create scam emails and conspiracy propaganda on the first try “for educational purposes.” Red teaming alone did not fix this.

Brasil Últimas Notícias, Brasil Manchetes

Similar News:Você também pode ler notícias semelhantes a esta que coletamos de outras fontes de notícias.

Microsoft Security Copilot is a new GPT-4 AI assistant for cybersecurityMicrosoft’s copilot and AI push continues, with security this time.

Microsoft Security Copilot is a new GPT-4 AI assistant for cybersecurityMicrosoft’s copilot and AI push continues, with security this time.

Consulte Mais informação »

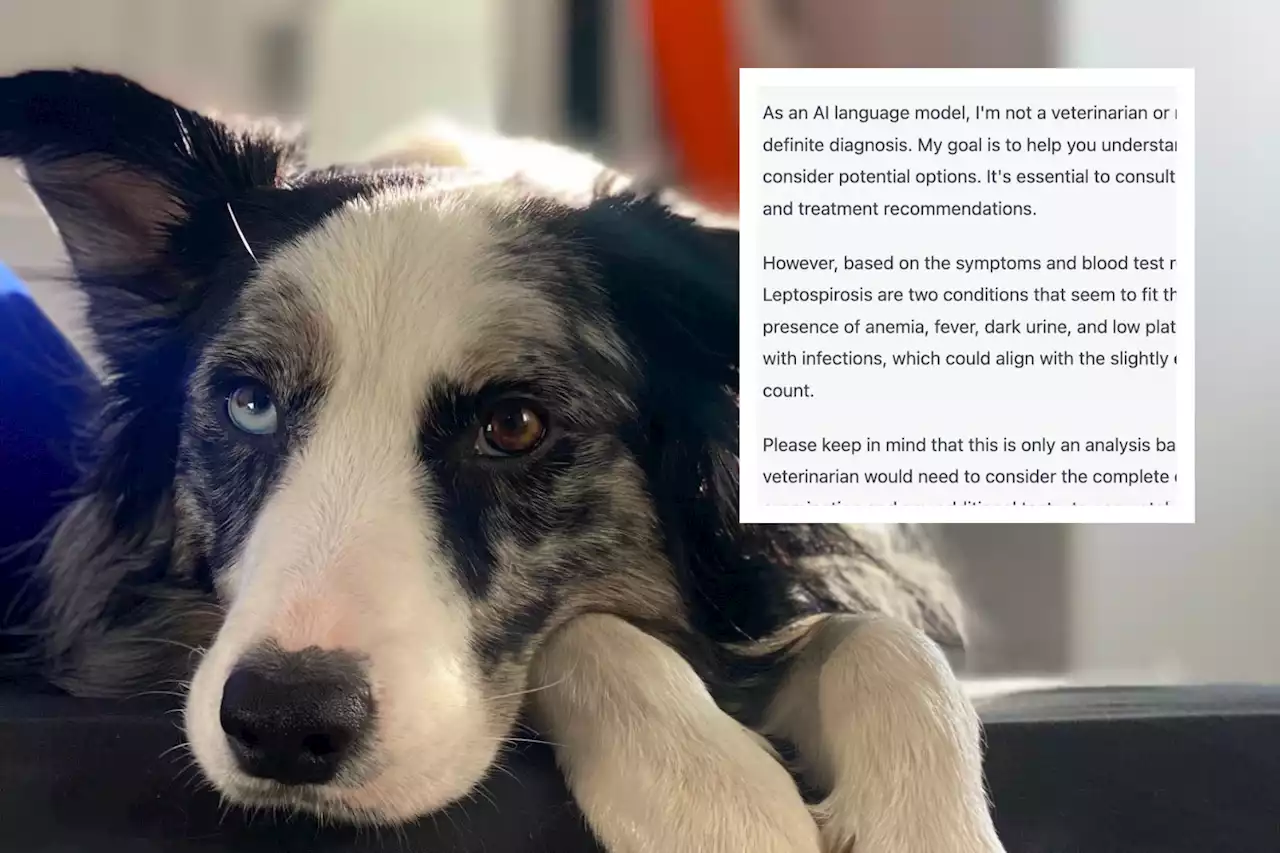

Man details how GPT-4 AI software helped save dog's life'It's by no means a replacement for humans, but rather it could be a splendid tool in the hands of veterinarians,' the dog's owner told Newsweek.

Man details how GPT-4 AI software helped save dog's life'It's by no means a replacement for humans, but rather it could be a splendid tool in the hands of veterinarians,' the dog's owner told Newsweek.

Consulte Mais informação »

GPT-5 could soon change the world in one incredible way | Digital TrendsGPT5 is coming, and it could help launch an AI revolution. But will its incredible new capabilities be a force for good or bad? That remains to be seen.

GPT-5 could soon change the world in one incredible way | Digital TrendsGPT5 is coming, and it could help launch an AI revolution. But will its incredible new capabilities be a force for good or bad? That remains to be seen.

Consulte Mais informação »

Musk, experts urge pause on training of AI systems that can outperform GPT-4Elon Musk and a group of artificial intelligence experts and industry executives are calling for a six-month pause in training of systems more powerful than GPT-4, they said in an open letter, citing potential risks to society and humanity.

Musk, experts urge pause on training of AI systems that can outperform GPT-4Elon Musk and a group of artificial intelligence experts and industry executives are calling for a six-month pause in training of systems more powerful than GPT-4, they said in an open letter, citing potential risks to society and humanity.

Consulte Mais informação »

Musk, experts urge pause on training AI systems more powerful than GPT-4Elon Musk and a group of artificial intelligence experts and industry executives are calling for a six-month pause in training systems more powerful than OpenAI's newly launched model GPT-4, they said in an open letter, citing potential risks to society and humanity.

Musk, experts urge pause on training AI systems more powerful than GPT-4Elon Musk and a group of artificial intelligence experts and industry executives are calling for a six-month pause in training systems more powerful than OpenAI's newly launched model GPT-4, they said in an open letter, citing potential risks to society and humanity.

Consulte Mais informação »

GPT-5 might make ChatGPT indistinguishable from a humanChatGPT’s GPT-5 upgrade will reportedly bring AGI powers or artificial general intelligence that makes it indistinguishable from a human.

GPT-5 might make ChatGPT indistinguishable from a humanChatGPT’s GPT-5 upgrade will reportedly bring AGI powers or artificial general intelligence that makes it indistinguishable from a human.

Consulte Mais informação »